Training AI Sustainably Depends on Where and How

As artificial intelligence (AI) advances, its sustainability matters more. Building eco-friendly AI requires intent at each step, not just in data centers but across the entire supply chain. The environmental impact of AI is significant, so companies and organizations must weigh the implications of their AI projects and work toward more sustainable practices.

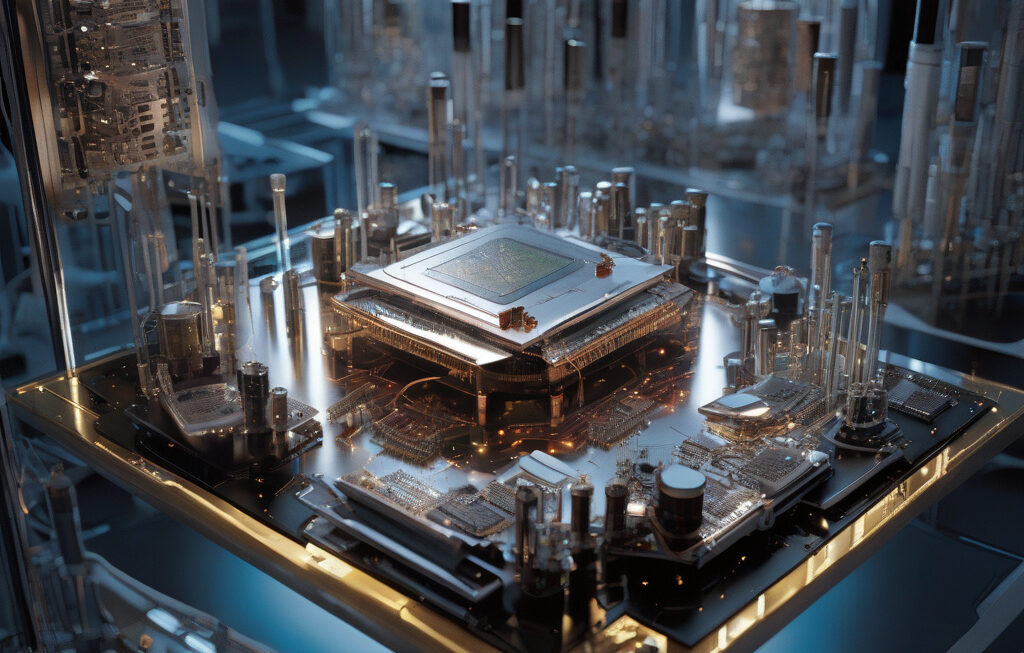

One of the key areas where sustainability matters in AI development is the training process. AI models are trained on vast amounts of data, which requires substantial computational power. That computation consumes a great deal of energy, and the resulting carbon footprint depends on how the energy is generated. Where and how AI models are trained therefore plays a central role in their sustainability.

Data centers, where the bulk of AI training takes place, are known for their high energy consumption, and they account for a meaningful share of the world’s electricity usage. To train AI sustainably, companies must prioritize energy efficiency in their data centers: using renewable energy sources, optimizing cooling systems, and deploying energy-efficient hardware.
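To make the effect of cooling and other overhead concrete, here is a minimal sketch that estimates the total facility energy of a training run using Power Usage Effectiveness (PUE), the ratio of total facility energy to IT equipment energy. PUE is not mentioned in the article itself; it is brought in here as a common data center efficiency metric. The function names and every number (accelerator count, power draw, hours, PUE values) are hypothetical placeholders, not measurements.

```python
# Hypothetical illustration: every figure below is a made-up placeholder.

def training_it_energy_kwh(num_accelerators: int, avg_power_watts: float, hours: float) -> float:
    """Energy drawn by the accelerators alone, in kWh."""
    return num_accelerators * avg_power_watts * hours / 1000.0


def facility_energy_kwh(it_energy_kwh: float, pue: float) -> float:
    """Total facility energy = IT equipment energy x PUE."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return it_energy_kwh * pue


# Assumed training run: 64 accelerators at 400 W average for 100 hours.
it_kwh = training_it_energy_kwh(num_accelerators=64, avg_power_watts=400.0, hours=100.0)

# Same run in a less efficient facility and in a more efficient one (assumed PUEs).
baseline_kwh = facility_energy_kwh(it_kwh, pue=1.6)
improved_kwh = facility_energy_kwh(it_kwh, pue=1.2)
saved_kwh = baseline_kwh - improved_kwh

print(f"IT energy: {it_kwh:.0f} kWh")
print(f"Facility energy at PUE 1.6: {baseline_kwh:.0f} kWh")
print(f"Facility energy at PUE 1.2: {improved_kwh:.0f} kWh")
print(f"Saved by better cooling and overhead: {saved_kwh:.0f} kWh")
```

Under these assumptions the computation is identical in both cases; only the overhead for cooling and power delivery changes, which is why facility efficiency is a separate lever from hardware efficiency.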

The location of data centers matters too. In regions where electricity comes largely from renewable sources, such as solar or wind power, training the same model emits less carbon than in regions that rely on fossil fuels. By locating data centers in areas with access to renewable energy, companies can significantly reduce the carbon footprint of their AI projects.
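The location effect can be sketched with simple arithmetic: emissions are roughly the energy consumed multiplied by the carbon intensity of the local grid. The example below is a minimal illustration, assuming a fixed energy budget for one training run and three hypothetical regions. The region names and intensity values are invented placeholders, not real grid data.

```python
# Hypothetical grid carbon intensities in grams of CO2-equivalent per kWh.
# These are placeholders for illustration, not measurements of any real grid.
GRID_INTENSITY_G_PER_KWH = {
    "region_mostly_renewable": 50.0,
    "region_mixed": 350.0,
    "region_mostly_fossil": 700.0,
}


def emissions_kg(energy_kwh: float, intensity_g_per_kwh: float) -> float:
    """Emissions in kg CO2e = energy (kWh) x grid intensity (g/kWh) / 1000."""
    return energy_kwh * intensity_g_per_kwh / 1000.0


def rank_regions(energy_kwh: float, intensities: dict) -> list:
    """Return (region, emissions_kg) pairs sorted from lowest to highest emissions."""
    results = [(region, emissions_kg(energy_kwh, g)) for region, g in intensities.items()]
    return sorted(results, key=lambda pair: pair[1])


# Assumed energy budget for one training run.
TRAINING_ENERGY_KWH = 10_000.0

ranking = rank_regions(TRAINING_ENERGY_KWH, GRID_INTENSITY_G_PER_KWH)
for region, kg in ranking:
    print(f"{region}: {kg:.0f} kg CO2e")
```

With these assumed values, the same training run produces 14 times more emissions in the fossil-heavy region than in the renewable-heavy one, even though the model and the hardware are unchanged.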

Sustainability also extends beyond data centers to the entire AI supply chain: the manufacturing of AI hardware, the sourcing of components, and the disposal of electronic waste. Companies should work with suppliers that adhere to sustainable practices and that prioritize recycling and proper disposal of electronic components.

The design of AI models also affects sustainability. More efficient algorithms require less computational power, which reduces the energy consumption of AI systems. Techniques such as model compression and quantization, which stores model weights at lower numerical precision, can improve efficiency further and make models more sustainable in the long run.
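As an illustration of quantization, the following is a minimal sketch of symmetric 8-bit quantization in plain Python. It is one simple scheme among many, not a description of any particular library, and the weight values and function names are hypothetical. Each weight is mapped to an integer in the range -127 to 127 using a single shared scale, so it can be stored in one byte instead of the four bytes a 32-bit float would need.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    # One shared scale for the whole list; fall back to 1.0 if all weights are zero.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the stored integers."""
    return [v * scale for v in quantized]


# Hypothetical weights, chosen only for illustration.
weights = [0.12, -0.5, 0.33, 0.91, -1.27]

q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print("quantized:", q)
print("scale:", scale)
print("largest round-trip error:", max_error)
```

The round-trip error is bounded by half the scale, which is the price paid for the smaller representation. Whether the smaller footprint translates into lower energy use depends on the hardware and the runtime, so this sketch shows only the storage side of the trade-off.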

In conclusion, training AI sustainably depends on where and how it is done. Companies must take a holistic approach to AI development, considering the environmental impact at every stage of the process. By focusing on energy-efficient practices, renewable energy sources, and sustainable supply chain management, organizations can build AI systems that are not only cutting-edge but also environmentally friendly.
