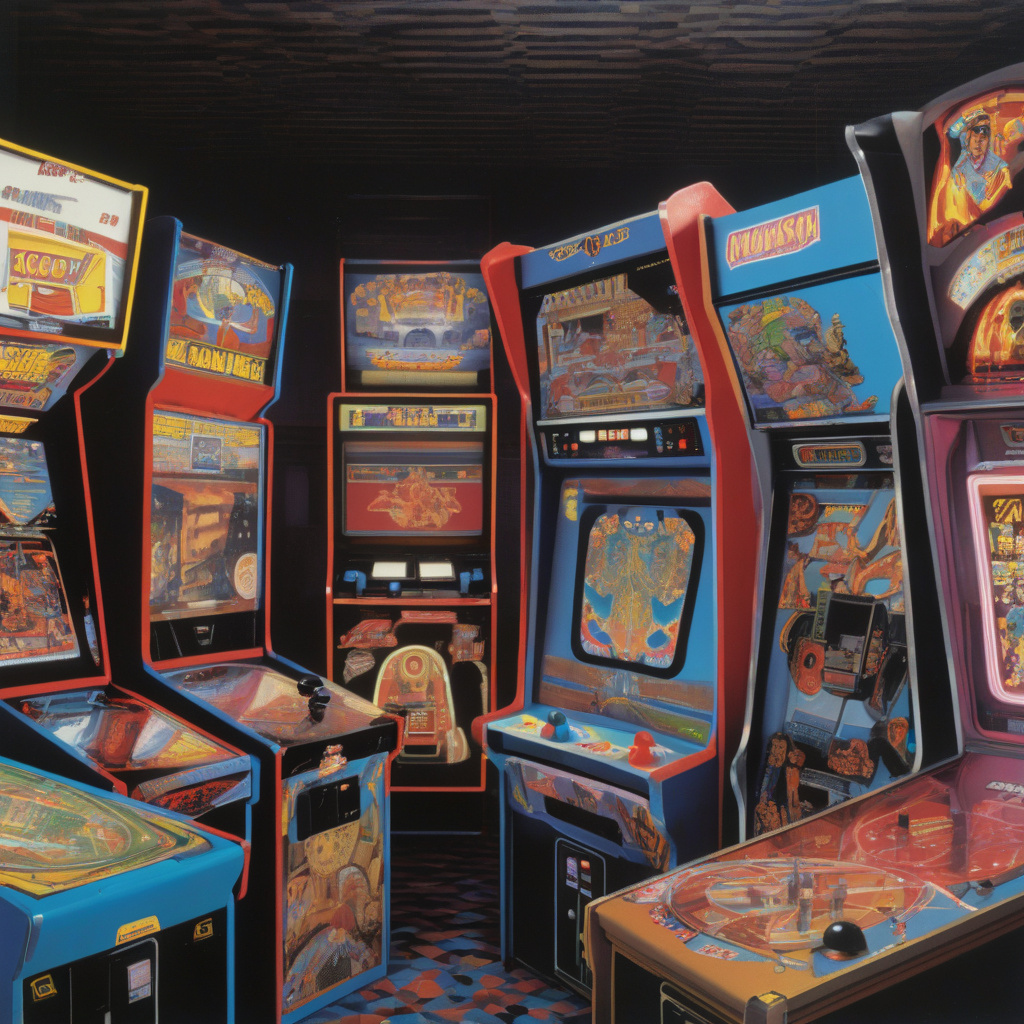

From Arcade Graphics to Powering the Smartest Machines: The Evolution of GPUs

The graphics processing unit (GPU) did not begin as a powerhouse driving artificial intelligence and machine learning. Early GPUs were used mainly to render graphics for video games in arcade machines and personal computers. Over the years their capabilities have grown considerably, turning them from graphics accelerators into essential components of some of the world’s most advanced technologies.

The journey of GPUs from arcade graphics to running complex algorithms in supercomputers and data centers is a remarkable story of technological advancement. A key turning point was the realization that their parallel processing architecture could be harnessed for more than rendering images. Researchers and developers began to explore the potential of GPUs to accelerate scientific simulations, data analysis, and artificial intelligence tasks.

The shift towards general-purpose computing on GPUs was fueled by their ability to run many operations at once: a modern GPU has thousands of simple cores. Central processing units (CPUs), by contrast, have far fewer cores, each optimized for fast sequential execution. GPUs therefore excel at work that can be broken into many independent sub-tasks and executed simultaneously, such as applying the same calculation to every pixel of an image.
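To make the idea of breaking a task into independent sub-tasks concrete, here is a minimal sketch in Python. It is an illustration only, not GPU code: the function names (`brighten`, `brighten_sequential`, `brighten_parallel`) and the brightening task are hypothetical, and Python threads will not deliver GPU-like speedups. The point is the shape of the work: each pixel can be processed without knowing anything about its neighbors, so the job can be split into chunks and handed to several workers at once.

```python
from concurrent.futures import ThreadPoolExecutor


def brighten(pixel, amount=10):
    """One sub-task: adjust a single pixel, independent of every other pixel."""
    return min(pixel + amount, 255)


def brighten_sequential(pixels):
    # Sequential style: visit one pixel after another in a single loop.
    return [brighten(p) for p in pixels]


def brighten_parallel(pixels, workers=4):
    # Parallel style (in spirit): split the data into chunks and let
    # several workers process the chunks concurrently.
    chunk = max(1, (len(pixels) + workers - 1) // workers)
    chunks = [pixels[i:i + chunk] for i in range(0, len(pixels), chunk)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() returns results in the same order as the input chunks.
        results = pool.map(brighten_sequential, chunks)
    return [p for part in results for p in part]


pixels = [0, 100, 250, 255, 17, 42, 200, 249]
# Both approaches give the same answer; only the scheduling differs.
assert brighten_parallel(pixels) == brighten_sequential(pixels)
```

A GPU applies the same principle at a much larger scale, with thousands of hardware cores in place of a handful of software workers.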

Today, GPUs are at the forefront of powering some of the world’s smartest machines, including deep learning systems, autonomous vehicles, and high-performance computing clusters. Companies like NVIDIA, AMD, and Intel have invested heavily in developing GPUs with specialized architectures tailored for AI and machine learning workloads.

In deep learning, GPUs have transformed the training of complex neural networks by sharply reducing the time needed to process massive amounts of data. Training runs that could take weeks on a CPU can in many cases be completed in hours, or even minutes, on powerful GPU clusters.

Autonomous vehicles rely on GPUs for real-time processing of sensor data, enabling them to make split-second decisions to navigate complex environments safely. The parallel computing capabilities of GPUs are well-suited for handling the massive amounts of data generated by sensors such as cameras, lidar, and radar.

In the realm of high-performance computing, GPUs are instrumental in accelerating scientific research, weather forecasting, and drug discovery. Supercomputers equipped with GPU accelerators can tackle complex simulations and computations with unparalleled speed and efficiency, unlocking new possibilities in various fields of study.

As GPUs continue to push the boundaries of what is possible in computing, the future looks promising for these versatile processors. With advancements in hardware and software optimization, GPUs are poised to play an even greater role in shaping the technologies of tomorrow.

In conclusion, the evolution of GPUs from their humble beginnings in arcade graphics to the driving force behind some of the world’s most advanced machines reflects decades of human ingenuity. As the limits of technology continue to be pushed, GPUs are likely to remain at the forefront, powering the next generation of intelligent systems.
