Why AI’s Energy Bottleneck Lies in the Physics of Computation

As generative AI adoption surges, the biggest bottleneck isn’t the model; it’s the chip. Today’s AI models are becoming increasingly sophisticated, capable of powering everything from autonomous vehicles to personalized medical treatments. However, artificial intelligence is advancing faster than the hardware needed to support it, and this gap is creating an energy bottleneck that threatens to slow AI innovation.

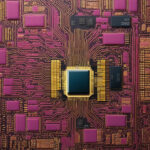

At the heart of this issue lies the physics of computation. Every operation a chip performs charges and discharges transistor capacitances, and moving data between memory and compute units often costs more energy than the arithmetic itself. As AI models grow larger and more complex, they require far more computation to train and run, which has driven the development of specialized AI chips designed to accelerate machine learning workloads. These chips have greatly improved the performance of AI systems, but they come with a substantial energy cost.
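A first-order way to make the physics concrete is the standard CMOS dynamic-power relation, P ≈ αCV²f, where α is the activity factor (fraction of capacitance switched per cycle), C the switched capacitance, V the supply voltage, and f the clock frequency. The sketch below uses hypothetical chip parameters chosen only for illustration, and it ignores leakage current and memory traffic. It also computes the Landauer bound, k_B·T·ln 2, the theoretical minimum energy needed to erase one bit, which practical logic exceeds by many orders of magnitude.

```python
import math

BOLTZMANN_J_PER_K = 1.380649e-23  # exact SI value of the Boltzmann constant


def dynamic_energy_per_cycle(activity: float, capacitance_f: float, voltage_v: float) -> float:
    """Switching energy per clock cycle, E = alpha * C * V^2, in joules."""
    return activity * capacitance_f * voltage_v ** 2


def dynamic_power(activity: float, capacitance_f: float, voltage_v: float, frequency_hz: float) -> float:
    """Dynamic power, P = alpha * C * V^2 * f, in watts (leakage ignored)."""
    return dynamic_energy_per_cycle(activity, capacitance_f, voltage_v) * frequency_hz


def landauer_limit(temperature_k: float) -> float:
    """Minimum energy to erase one bit at temperature T: k_B * T * ln 2, in joules."""
    return BOLTZMANN_J_PER_K * temperature_k * math.log(2)


if __name__ == "__main__":
    # Hypothetical parameters for illustration only, not taken from any real chip.
    alpha, cap_f, freq_hz = 0.1, 1e-9, 1e9  # activity factor, switched capacitance (F), clock (Hz)
    for volts in (1.0, 0.5):
        watts = dynamic_power(alpha, cap_f, volts, freq_hz)
        print(f"V = {volts} V -> dynamic power = {watts:.3f} W")
    print(f"Landauer limit at 300 K: {landauer_limit(300):.3e} J per bit erased")
```

Because power scales with V² in this model, halving the supply voltage cuts dynamic power by a factor of four, which is why lowering supply voltage has historically been such a powerful lever for efficiency.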

The energy consumption of AI chips is a major concern for several reasons. First, the environmental impact of running massive data centers filled with energy-hungry AI hardware is substantial. The carbon footprint of AI training has been compared to that of the aviation industry, and one widely cited estimate found that training a single large model, including an extensive neural architecture search, could emit roughly as much carbon dioxide as five cars over their entire lifetimes.

In addition to the environmental implications, the energy bottleneck in AI has practical consequences as well. The high energy requirements of AI systems make them prohibitively expensive to run at scale, limiting their accessibility to only the largest tech companies with the resources to support them. This concentration of AI power in the hands of a few key players raises concerns about data privacy, competition, and innovation in the field.

Addressing the energy bottleneck in AI will require a multifaceted approach that combines advances in hardware design, algorithmic efficiency, and renewable energy. One promising area of research is neuromorphic computing, which aims to mimic the brain’s energy-efficient neural networks in silicon. Neuromorphic chips typically use spiking neurons that do work only when events occur, rather than processing every input at every step, and researchers hope this approach will drastically reduce the energy consumption of AI systems while maintaining high performance.
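The event-driven idea behind neuromorphic hardware can be illustrated with a leaky integrate-and-fire (LIF) neuron, a common abstraction in spiking neural networks. This is a minimal sketch that does not model any particular chip; the leak factor, threshold, and input sequence are hypothetical values chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class LIFNeuron:
    """Discrete-time leaky integrate-and-fire neuron (hypothetical parameters)."""
    leak: float = 0.9       # fraction of membrane potential retained each step
    threshold: float = 1.0  # potential at which the neuron emits a spike
    potential: float = 0.0

    def step(self, input_current: float) -> bool:
        # Leak part of the stored potential, then integrate the new input.
        self.potential = self.potential * self.leak + input_current
        if self.potential >= self.threshold:
            self.potential = 0.0  # reset after firing
            return True
        return False


def run(inputs: list[float]) -> list[int]:
    """Feed inputs to a fresh neuron and return the time steps at which it spiked."""
    neuron = LIFNeuron()
    return [t for t, current in enumerate(inputs) if neuron.step(current)]


if __name__ == "__main__":
    inputs = [0.0, 0.0, 0.6, 0.6, 0.0, 0.0, 0.0, 1.2, 0.0, 0.0]
    spikes = run(inputs)
    print(f"spikes at steps {spikes}: {len(spikes)} events out of {len(inputs)} steps")
```

Here the neuron fires at only two of the ten steps, so downstream work is triggered only twice, whereas a conventional dense layer would process every input at every step. Note also that two consecutive inputs of 0.5 would not trigger a spike, because the leak drains part of the first input before the second arrives.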

Another key strategy for overcoming the energy bottleneck in AI is to make algorithms more efficient. Reducing the number of operations a model performs, and the amount of data it moves, directly lowers the energy needed to train and run it. Techniques such as quantization (storing weights and activations in lower-precision formats), sparsity (skipping computations on zero-valued weights or activations), and model distillation (training a smaller student model to mimic a larger teacher) have shown promise in improving energy efficiency with little loss in accuracy.
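The three techniques named in this paragraph can each be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not a production implementation: it assumes symmetric per-tensor int8 quantization, unstructured magnitude pruning as the route to sparsity, and the temperature-softened KL-divergence loss commonly used for knowledge distillation. The weights and logits are hypothetical.

```python
import math


def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor int8 quantization: w is approximated by scale * q, q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Map int8 codes back to approximate float values."""
    return [scale * v for v in q]


def magnitude_prune(weights: list[float], sparsity: float) -> list[float]:
    """Zero out the smallest-magnitude fraction `sparsity` of the weights."""
    k = int(len(weights) * sparsity)  # number of weights to drop
    if k == 0:
        return list(weights)
    drop = set(sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


def softened_softmax(logits: list[float], temperature: float) -> list[float]:
    """Softmax at temperature T; higher T spreads probability across classes."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits: list[float], teacher_logits: list[float],
                      temperature: float = 2.0) -> float:
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    p = softened_softmax(teacher_logits, temperature)
    q = softened_softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return temperature ** 2 * kl


if __name__ == "__main__":
    w = [0.82, -0.03, 0.41, -1.27, 0.005, 0.66]  # hypothetical weights
    q, scale = quantize_int8(w)
    err = max(abs(a - b) for a, b in zip(w, dequantize(q, scale)))
    print("int8 codes:", q, "max abs error:", round(err, 4))
    print("50% pruned:", magnitude_prune(w, 0.5))
    print("distillation loss:", distillation_loss([2.0, 0.5, -1.0], [2.5, 0.3, -1.2]))
```

In energy terms, int8 values occupy a quarter of the storage of 32-bit floats, so fewer bytes move through memory; pruned zeros save energy only when the hardware or software actually skips them.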

Finally, integrating renewable energy sources into AI infrastructure could help mitigate the environmental impact of these systems. Powering data centers with solar, wind, or hydroelectric energy does not reduce the energy they consume, but it lowers the emissions associated with each kilowatt-hour, helping tech companies reduce their carbon footprint and contributing to a more sustainable future for AI development.
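The effect of cleaner power can be seen with the usual operational-emissions arithmetic: emissions ≈ IT energy × PUE (power usage effectiveness, the ratio of total facility energy to IT energy) × grid carbon intensity. All figures below are hypothetical placeholders rather than measurements of any real training run or grid, and the sketch ignores embodied emissions from manufacturing the hardware.

```python
def operational_emissions_kg(it_energy_kwh: float, pue: float,
                             grid_intensity_g_per_kwh: float) -> float:
    """Operational CO2e in kg: IT energy x PUE x grid carbon intensity (g/kWh)."""
    facility_energy_kwh = it_energy_kwh * pue  # adds cooling and power-delivery overhead
    return facility_energy_kwh * grid_intensity_g_per_kwh / 1000.0  # grams -> kilograms


if __name__ == "__main__":
    # Hypothetical inputs, chosen only to show how the terms combine.
    training_run_kwh = 100_000
    pue = 1.2
    for label, intensity in [("fossil-heavy grid", 700), ("mostly renewable supply", 50)]:
        kg = operational_emissions_kg(training_run_kwh, pue, intensity)
        print(f"{label}: {kg:,.0f} kg CO2e")
```

The energy term is identical in both cases; only the carbon intensity changes. That is why renewable supply complements, rather than replaces, efficiency gains in hardware and algorithms.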

In conclusion, the energy bottleneck in AI is a complex, multifaceted challenge that will require coordinated effort from researchers, industry leaders, and policymakers. By investing in energy-efficient hardware, optimizing algorithms, and embracing renewable energy sources, we can pave the way for a more sustainable and accessible future for artificial intelligence.

energy efficiency, AI hardware, computational physics, sustainable technology, algorithm optimization