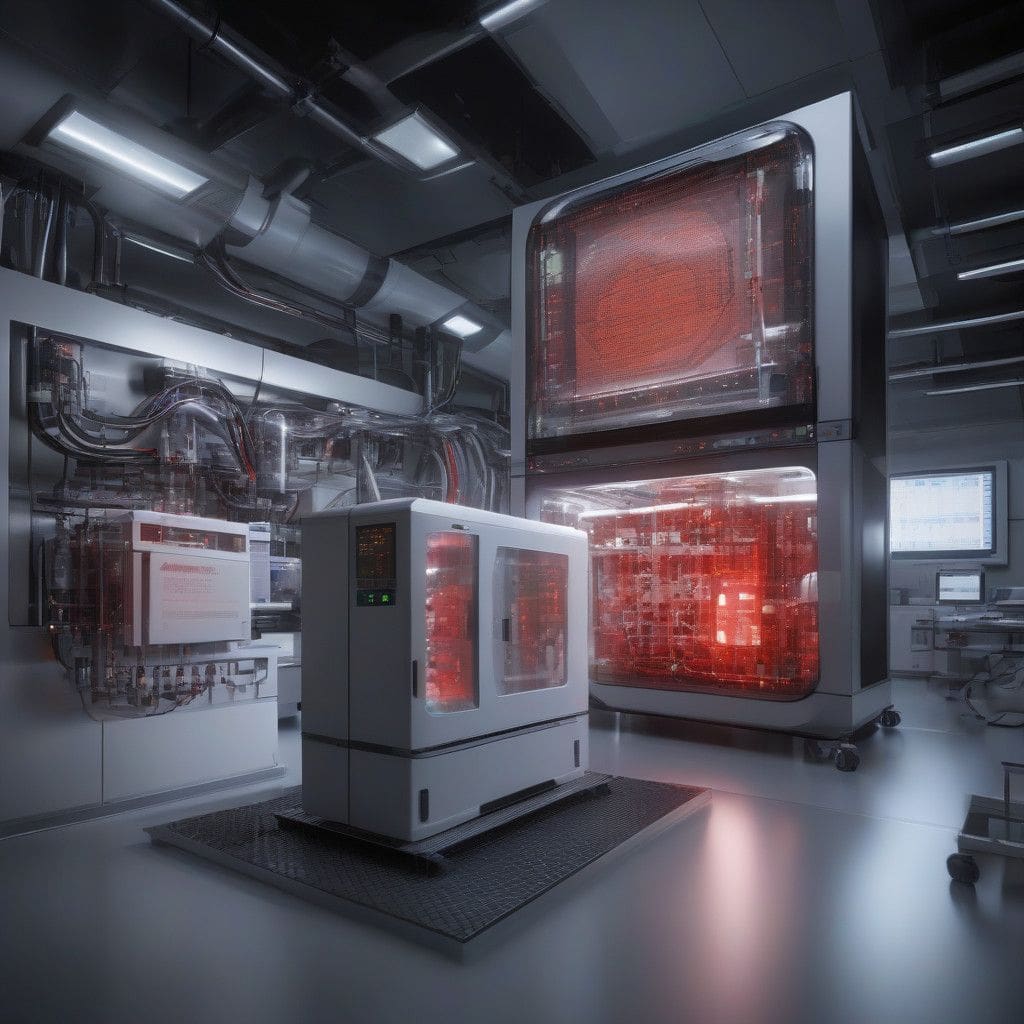

Nvidia is working through a set of challenges related to its highly anticipated Blackwell AI chips. As demand for AI technology surges, companies are eagerly awaiting deployment of these chips, which are critical for training large-scale AI models. However, concerns about overheating in the custom server racks that house the chips have sparked discussion among stakeholders.

The Blackwell architecture, designed to optimize performance for AI tasks, was originally expected to have a smoother rollout. Yet, as shipments approach, Nvidia has faced scrutiny over the engineering work required to fit these advanced systems into diverse data center environments. Each custom server rack holds 72 AI chips, and multiple design revisions have emerged late in the manufacturing process, raising red flags.

One notable partner is Dell, which is currently shipping Nvidia’s GB200 NVL72 server racks to early adopters such as CoreWeave. Nvidia describes its iterative engineering approach as standard procedure for integrating Blackwell chips into real-world environments. This collaborative effort is expected to support successful deployments, a crucial factor in maintaining customer confidence.

Despite this progress, Nvidia’s current challenges follow earlier production delays linked to design flaws. CEO Jensen Huang has openly discussed these issues, acknowledging that low production yields required significant joint work with Taiwan Semiconductor Manufacturing Company (TSMC). Resolving such flaws is paramount, as they not only slow progress but also affect Nvidia’s reputation in the competitive AI market.

As the company prepares to release its fiscal third-quarter earnings, financial analysts project revenue of $33 billion and net income of $17.4 billion. This forecast underscores the market’s optimism about Nvidia’s AI capabilities, despite a slight dip in the stock price earlier in the week. Nvidia’s shares have risen 187% over the year, demonstrating robust investor confidence in its AI-driven future.

How Nvidia responds during this turbulent period will play a critical role in shaping its long-term trajectory. While the overheating concerns are a potential roadblock, the company’s readiness to revise and improve its designs can be seen as a marker of resilience. The collaboration with major cloud service providers and the ongoing product iterations suggest that Nvidia is focused not only on meeting its immediate deadlines but also on cementing its position as a leader in the growing AI sector.

The path forward for Nvidia and its Blackwell AI chips will depend on how well the company addresses these pressing issues. With competition intensifying and the stakes higher than ever, a successful launch could solidify Nvidia’s standing as a powerhouse in AI technology.

For industry stakeholders, the implications of Nvidia’s advancements are profound. The success of the Blackwell chips could facilitate breakthroughs in various sectors reliant on powerful AI computing, from healthcare to autonomous vehicles. As the real-world applications of AI continue to expand, the performance and reliability of chips like Blackwell become central to achieving ambitious technological goals.

In conclusion, Nvidia’s journey with its Blackwell AI chips is a crucial watchpoint for anyone invested in technology and innovation. The hurdles encountered and overcome along the way paint a picture of a dynamic and demanding landscape where precision and performance are non-negotiable. The arrival of these chips on the market will not only affect Nvidia’s financial growth but also influence the broader technological ecosystem.