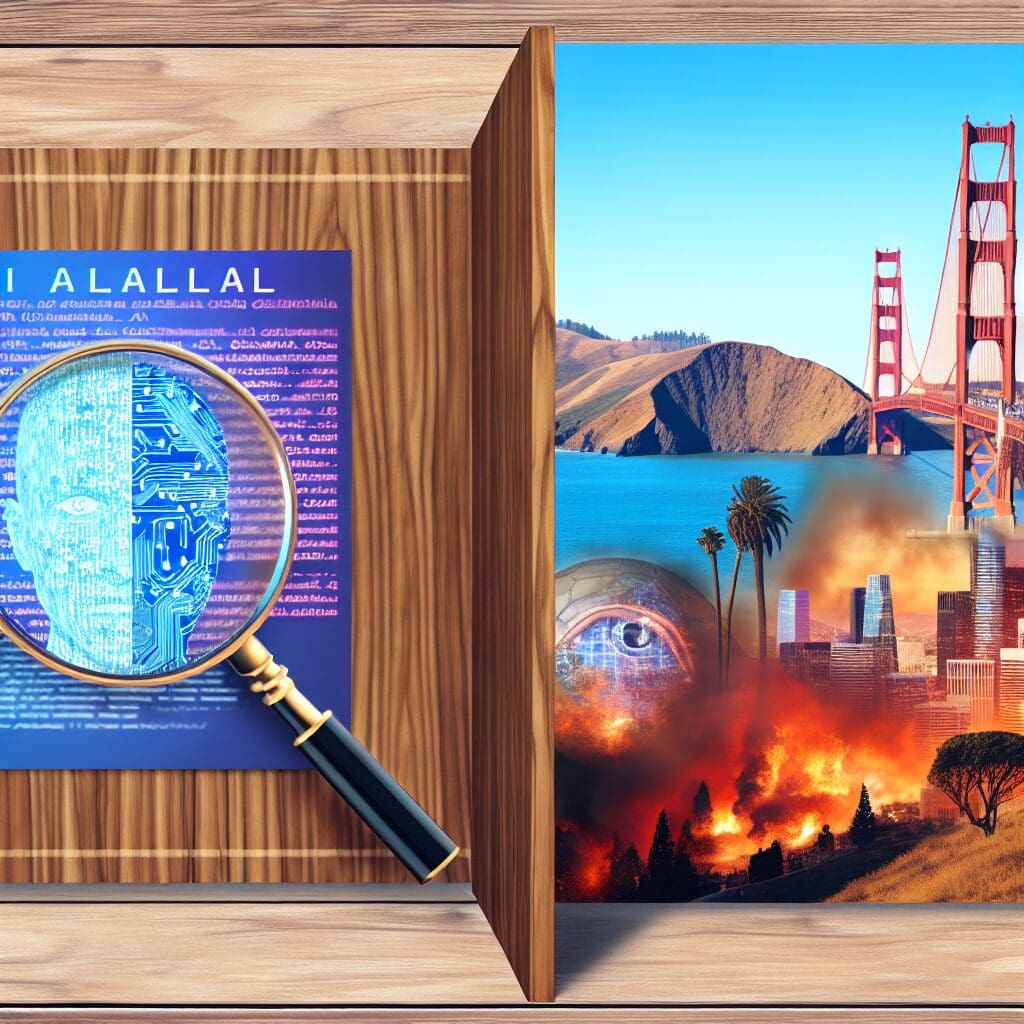

In recent discussions of artificial intelligence regulation, California has moved to the forefront with its proposed bill SB 1047, which aims to establish stricter controls on large AI models. The initiative seeks to preemptively address potential harms associated with AI technologies. Advocates argue that regulation is necessary to prevent catastrophic outcomes, while critics in the tech community worry that it will stifle innovation.

The bill has a dual focus: ensuring the safety of AI applications and paving the way for greater transparency. By requiring companies to disclose their AI training data and model architectures, the bill intends to hold tech giants accountable for their systems’ decisions. For example, if an AI system used in healthcare were to misdiagnose patients, transparency could help trace the error back to its source.

However, resistance from major players in the tech industry cannot be overlooked. Companies argue that the bill's requirements could hinder competitiveness and deter future investment in California’s innovation landscape. According to a recent report, tech leaders emphasized that excessive regulation could leave policy gaps as technologies evolve rapidly.

Nonetheless, the need for a balanced approach remains clear. Striking a compromise that safeguards the public while promoting technological advancement is crucial. As debate continues, the California bill could set a precedent for similar initiatives worldwide, making it a significant topic for business leaders and policymakers alike. As the conversation progresses, stakeholders will need to consider how best to align safety with innovation.