As artificial intelligence expands rapidly, efficiency has become a key concern. Recent research has shed new light on quantization, a technique that streamlines AI systems by reducing the numerical precision of model parameters. While this approach saves both computation and memory, it also carries risks that can significantly degrade model accuracy. Understanding these risks is vital for businesses and organizations that rely on AI technologies as they navigate the fine line between efficiency and effectiveness.

Quantization works by converting floating-point numbers, typically 32-bit or 16-bit, into lower-precision formats such as 8-bit or 4-bit integers. Because each value then occupies fewer bits, the model needs less memory and less compute, which makes it practical for a wider range of applications, particularly on mobile devices and in edge computing environments. For instance, storing a model's weights as 8-bit integers instead of 32-bit floats cuts their memory footprint by a factor of four, allowing faster processing on more modest hardware.
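To make the mechanics concrete, here is a minimal sketch of symmetric per-tensor quantization to signed 8-bit integers in plain Python. It is one common scheme among several (asymmetric and per-channel variants also exist), not the method used by any particular framework. The function names are hypothetical, and the 7-billion-parameter figure in the memory example is purely illustrative.

```python
def quantize_symmetric(values, num_bits=8):
    """Map floats to signed integers using one shared scale (symmetric, per-tensor)."""
    qmax = 2 ** (num_bits - 1) - 1  # 127 when num_bits == 8
    max_abs = max(abs(v) for v in values)
    # The largest magnitude maps to qmax; guard against an all-zero tensor.
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp into [-qmax, qmax].
    codes = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return codes, scale


def dequantize(codes, scale):
    """Recover approximate floats; each value is off by at most scale / 2."""
    return [c * scale for c in codes]


def weight_memory_bytes(num_params, bits_per_param):
    """Storage for the weights alone (ignores activations and metadata)."""
    return num_params * bits_per_param // 8


weights = [0.5, -1.27, 0.003, 1.0]
codes, scale = quantize_symmetric(weights)
restored = dequantize(codes, scale)
print(codes)  # [50, -127, 0, 100]
print(max(abs(w - r) for w, r in zip(weights, restored)))

# Hypothetical 7-billion-parameter model: 32-bit floats vs 8-bit integers.
print(weight_memory_bytes(7_000_000_000, 32))  # 28000000000
print(weight_memory_bytes(7_000_000_000, 8))   # 7000000000
```

The rounding step is where information is lost: 0.003 is smaller than half a quantization step and collapses to zero. Using fewer bits makes `scale` larger and the rounding coarser.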

However, the efficiency gained from quantization is not limitless. Recent analysis suggests that although quantization improves computational efficiency, its benefits do not scale well to larger, more sophisticated models. As models grow in size and complexity, the resulting loss in performance becomes more pronounced and can cancel out much of what quantization saves. This raises concerns for organizations that depend heavily on AI systems for their operations.

In practice, models trained on massive datasets can show noticeable drops in performance when quantization is applied inappropriately. Researchers at various tech firms have found that quantizing highly complex models often involves a trade-off: a significant loss of accuracy and reliability. For instance, an image-recognition model may lose the ability to distinguish between similar objects if its precision is reduced too drastically. The resulting rise in error rates is especially concerning in industries where precision is paramount, such as healthcare and autonomous driving.
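The effect of reducing precision too drastically can be shown with a toy example. Below, two hypothetical feature vectors standing in for two similar objects differ only slightly in one component. For simplicity they are quantized against a fixed calibration range of [-1, 1], which is an assumption rather than how any specific framework calibrates. At 8 and 4 bits the vectors still map to different integer codes, but at 3 bits and below they collapse to identical codes, so no downstream layer can tell them apart.

```python
def quantize_fixed_range(values, num_bits, max_range=1.0):
    """Symmetric quantization with a fixed calibration range [-max_range, max_range]."""
    qmax = 2 ** (num_bits - 1) - 1
    return [max(-qmax, min(qmax, round(v / max_range * qmax))) for v in values]


# Hypothetical features for two visually similar objects (e.g. two breeds of cat).
features_a = [0.62, 0.10]
features_b = [0.66, 0.10]

for bits in (8, 4, 3, 2):
    codes_a = quantize_fixed_range(features_a, bits)
    codes_b = quantize_fixed_range(features_b, bits)
    status = "distinct" if codes_a != codes_b else "indistinguishable"
    print(f"{bits}-bit: {codes_a} vs {codes_b} -> {status}")
```

Real models work with thousands of features rather than two, but the mechanism is the same: each bit removed halves the number of available levels, and inputs that differ by less than a step become identical.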

One noteworthy case involved a prominent AI-driven image classification tool that adopted quantization to speed up processing. The model initially responded faster, but its loss in accuracy soon became evident as it began misclassifying images, a critical failure in any application that depends on accurate results. This incident underscores the need for organizations to evaluate not only the computational benefits of quantization but also its effect on overall model performance.
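One way to act on this lesson is to score the full-precision and quantized models on the same labeled hold-out set and report both accuracy and how often their predictions agree. The sketch below uses a hypothetical one-feature linear classifier whose parameters are rounded to a grid of 0.25 as a crude stand-in for low precision; the model, the inputs, and the step size are all illustrative assumptions.

```python
def quantize_to_step(value, step):
    """Round a parameter to the nearest multiple of `step` (a crude stand-in for low precision)."""
    return round(value / step) * step


def make_classifier(weight, bias):
    """Hypothetical one-feature linear classifier: predict 1 when weight * x + bias > 0."""
    return lambda x: 1 if weight * x + bias > 0 else 0


def accuracy(predict, inputs, labels):
    """Fraction of examples whose prediction matches the label."""
    return sum(predict(x) == y for x, y in zip(inputs, labels)) / len(labels)


def agreement(predict_a, predict_b, inputs):
    """Fraction of inputs on which two models give the same prediction."""
    return sum(predict_a(x) == predict_b(x) for x in inputs) / len(inputs)


weight, bias = 1.0, -0.55
reference = make_classifier(weight, bias)
quantized = make_classifier(quantize_to_step(weight, 0.25), quantize_to_step(bias, 0.25))

# Held-out examples; inputs near the decision boundary are the ones at risk.
inputs = [0.10, 0.30, 0.52, 0.54, 0.60, 0.90]
labels = [0, 0, 0, 0, 1, 1]

print("reference accuracy:", accuracy(reference, inputs, labels))
print("quantized accuracy:", accuracy(quantized, inputs, labels))
print("agreement:", agreement(reference, quantized, inputs))
```

Rounding moves the bias from -0.55 to -0.5, so the two inputs closest to the decision boundary flip to the wrong class. A latency benchmark on its own would not reveal this.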

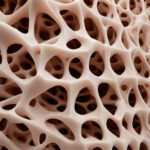

Understanding how quantization affects AI systems requires a closer look at the neural networks involved. These networks are typically trained on extensive datasets, and the precise values of their learned parameters encode the patterns they capture. Reducing the precision of those parameters introduces small rounding errors that can erode the model's ability to recognize intricate patterns, leading to poorer decisions. Because each layer feeds the next, errors introduced early can compound as they propagate through the network. This is particularly relevant when deploying AI solutions at scale, where even minor errors can grow into larger problems.
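The compounding effect can be illustrated with a deliberately simple, hypothetical model: a chain of scalar layers that each multiply their input by the same weight, 1.03, which rounds to 1.0 on a grid of 0.1. This assumes the unfavorable case in which the rounding error has the same sign at every layer; in real networks errors can partly cancel or be amplified, so treat it as an illustration of the mechanism rather than a measurement.

```python
def quantize_to_step(value, step):
    """Round a parameter to the nearest multiple of `step`."""
    return round(value / step) * step


def chain_output(weight, depth, x=1.0):
    """Pass x through `depth` identical scalar layers, each computing weight * x."""
    for _ in range(depth):
        x = weight * x
    return x


weight = 1.03
q_weight = quantize_to_step(weight, 0.1)  # 1.03 rounds down to 1.0

relative_errors = []
for depth in (1, 5, 10, 20):
    full = chain_output(weight, depth)
    quant = chain_output(q_weight, depth)
    rel_err = abs(full - quant) / abs(full)
    relative_errors.append(rel_err)
    print(f"depth {depth:2d}: relative error {rel_err:.1%}")
```

The per-layer error is under 3%, yet after 20 layers the output is off by roughly 45%, because the relative error here equals 1 - 1/1.03^depth.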

Moreover, quantization should not be evaluated in isolation. Organizations need a comprehensive strategy for monitoring and assessing its effects across their AI systems, with data validation and performance testing as core steps. Dynamic monitoring in production can guard against the unintended consequences of overly aggressive quantization, helping businesses strike a balance between efficiency and performance fidelity.
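Dynamic monitoring can take many forms. One simple sketch: mirror a sample of production traffic to a full-precision reference model, record whether the quantized model's prediction matches, and raise an alert when agreement over a sliding window falls below a threshold. The class name, window size, and 98% default threshold are hypothetical choices for illustration.

```python
from collections import deque


class AgreementMonitor:
    """Sliding-window check that a quantized model still agrees with a full-precision reference."""

    def __init__(self, window_size=100, min_agreement=0.98):
        self.results = deque(maxlen=window_size)  # oldest entries drop out automatically
        self.min_agreement = min_agreement

    def record(self, reference_pred, quantized_pred):
        """Store whether the two models agreed on one sampled request."""
        self.results.append(reference_pred == quantized_pred)

    def agreement(self):
        """Fraction of agreeing predictions in the current window."""
        if not self.results:
            return 1.0
        return sum(self.results) / len(self.results)

    def should_alert(self):
        # Wait for a full window so a handful of early samples cannot trigger an alert.
        window_full = len(self.results) == self.results.maxlen
        return window_full and self.agreement() < self.min_agreement


# Usage with a tiny window: nine matching predictions, then one mismatch.
monitor = AgreementMonitor(window_size=10, min_agreement=0.9)
for _ in range(9):
    monitor.record("cat", "cat")
monitor.record("cat", "dog")
print(monitor.agreement(), monitor.should_alert())  # 0.9 False

monitor.record("cat", "dog")  # the oldest match is evicted
print(monitor.agreement(), monitor.should_alert())  # 0.8 True
```

Agreement with a reference model needs no ground-truth labels, which makes it usable on live traffic; the cost is running the full-precision model on the sampled requests.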

As businesses continue to adopt AI, the findings on quantization argue for an approach that pairs efficiency with oversight. By equipping teams with the knowledge and tools to assess the effects of quantization, organizations can navigate this complex landscape more effectively. This is imperative for industries where precision and reliability are not preferences but prerequisites for success.

As the field of artificial intelligence advances, understanding the limitations of efficiency techniques like quantization will be vital. Companies need to weigh the long-term implications of trading performance for speed and resource savings. Thorough testing, validation, and close attention to model performance help ensure that AI systems not only operate efficiently but also deliver the reliable, accurate outcomes needed to stay competitive.

In summary, while quantization may present an enticing path toward enhanced efficiency in artificial intelligence, its risks must be carefully managed to ensure optimal performance. Striking the right balance will determine how successfully businesses can harness AI technologies in the future.